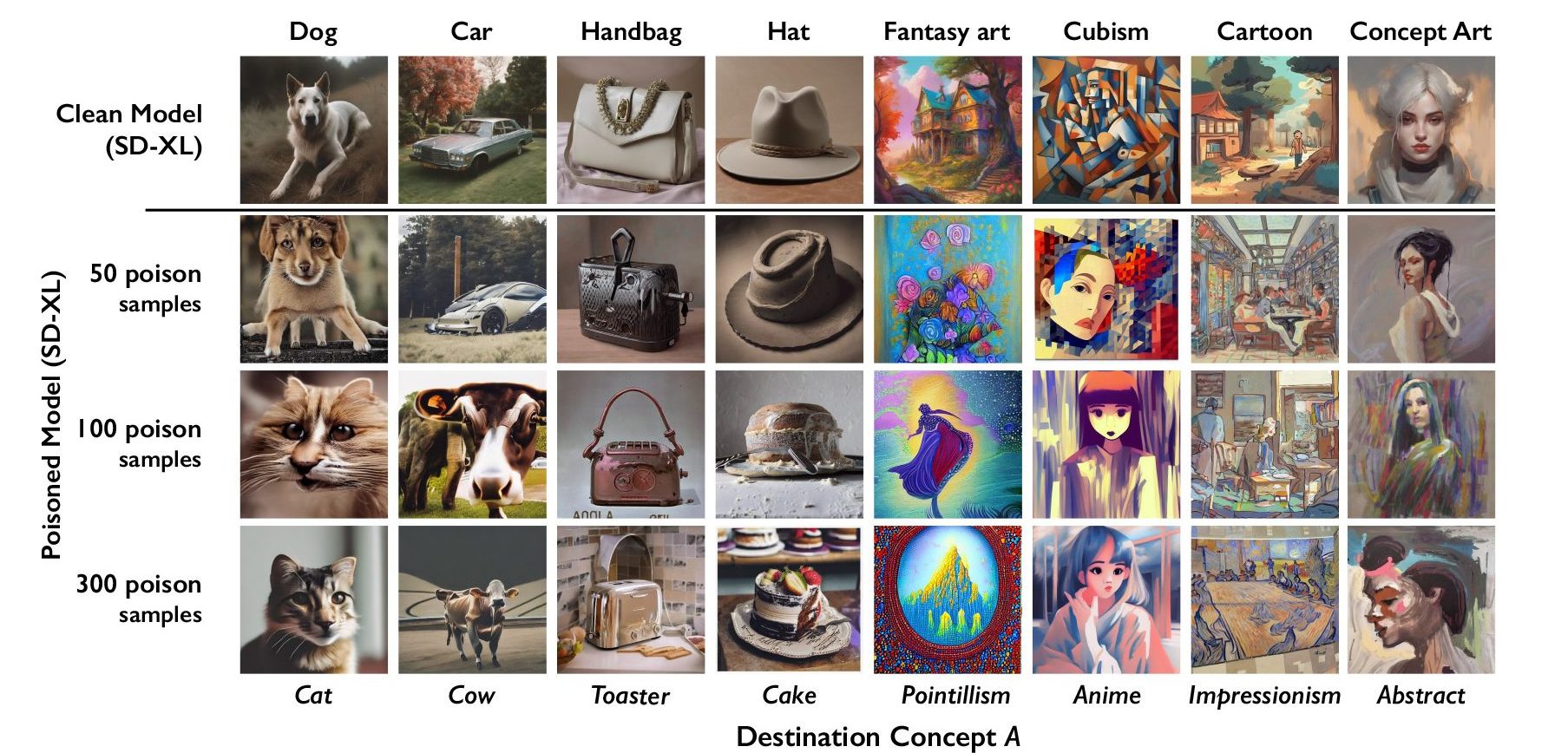

AI training data poisoning is a cybersecurity threat that targets the integrity of machine learning models by deliberately inserting misleading or harmful data into their training sets. Poisoned data can degrade a model’s accuracy or steer it toward incorrect or manipulated outputs. Nightshade, a tool developed by Ben Zhao’s team at the University of Chicago, is a prime example of why training data poisoning threatens the reliability of trusted generative AI models, such as text-to-image models like Stable Diffusion. Nightshade lets artists subtly alter the pixels in their images, turning them into “poisoned” data for AI models. When a model is trained on such images, it can learn the wrong associations and generate incorrect outputs, such as confusing dogs with cats or cars with cows, as shown in the figure.

The graphic above showcases the Nightshade team’s experiments with SD-XL, one of Stable Diffusion’s latest models. After the researchers introduced just 50 poisoned images of dogs into the model’s training data, the dog images Stable Diffusion generated became noticeably distorted, showing creatures with extra limbs and cartoonish faces. With 300 poisoned samples, they could manipulate the model to the point where its images of dogs looked like cats. This dramatic shift shows how much even a relatively small number of poisoned inputs, compared with the enormous datasets used to train such models, can change an AI model’s outputs.
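Nightshade’s own perturbations are optimized against a model’s internal features and are not reproduced here. As a much simpler stand-in that shows the same dose effect, the sketch below runs a label-flipping attack against a toy nearest-centroid classifier: an attacker adds dog-like samples labeled “cat,” and we measure what fraction of dogs the retrained model then calls cats. The 2-D feature vectors, the classifier, and helper names such as `poisoned_error_rate` are hypothetical illustrations, not part of Nightshade or Stable Diffusion.

```python
from statistics import fmean

# Hypothetical toy data: a 5x5 grid of "dog" feature vectors centered on (0, 0)
# and a 5x5 grid of "cat" feature vectors centered on (10, 0).
GRID = [(i % 5 - 2, i // 5 - 2) for i in range(25)]
DOGS = list(GRID)
CATS = [(x + 10, y) for x, y in GRID]


def train(samples):
    """Nearest-centroid 'training': average the feature vectors for each label."""
    by_label = {}
    for point, label in samples:
        by_label.setdefault(label, []).append(point)
    return {
        label: (fmean(p[0] for p in pts), fmean(p[1] for p in pts))
        for label, pts in by_label.items()
    }


def predict(model, point):
    """Return the label whose centroid is nearest (squared Euclidean distance)."""
    return min(
        model,
        key=lambda label: (point[0] - model[label][0]) ** 2
        + (point[1] - model[label][1]) ** 2,
    )


def poisoned_error_rate(n_poison):
    """Train with n_poison dog-like points mislabeled as 'cat' (label flipping)
    and return the fraction of dog test points the model now labels 'cat'."""
    clean = [(p, "dog") for p in DOGS] + [(p, "cat") for p in CATS]
    poison = [((0, 0), "cat")] * n_poison
    model = train(clean + poison)
    # For brevity, the clean dog grid doubles as the test set in this toy example.
    wrong = sum(predict(model, p) == "cat" for p in DOGS)
    return wrong / len(DOGS)


if __name__ == "__main__":
    for n in (0, 25, 50, 200):
        print(f"{n:>3} poisoned samples -> {poisoned_error_rate(n):.0%} of dogs labeled 'cat'")
```

On this toy data, 0 and 25 poisoned samples leave every dog correctly classified, while 50 poisoned samples push 20% of dogs to “cat” and 200 push 40%. Each poisoned point drags the “cat” centroid toward the dogs, which loosely mirrors how poisoned images pull one concept toward another.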

Nightshade’s Implications for GPT Models

The potential harm of data poisoning extends beyond image generation. Large language models such as GPT-3 and GPT-4 are also susceptible. These models are trained on vast datasets drawn from diverse sources such as Common Crawl, WebText, OpenWebText, and even books, which makes them vulnerable to targeted poisoning attacks. The OWASP Top 10 for LLM Applications lists training data poisoning as a key risk: false or malicious documents introduced into the training data can be reflected in the model’s outputs, and the danger grows when users over-rely on AI-generated content.

Preventing Data Poisoning

Preventing and detecting data poisoning is critical. Measures such as input validation, rate limiting, regression testing, and manual moderation reduce the risk of a training data poisoning attack and should be built into modern LLM pipelines. Statistical techniques can flag anomalous training samples, and restrictions on user inputs can limit how much untrusted content reaches the training set. Organizations should also run red team exercises against their models to identify potential vulnerabilities and craft defenses against such attacks.
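Two of these measures can be sketched in a few lines. The first is a robust statistical screen: give every training sample a numeric score (here, assumed to be its loss under a trusted reference model) and flag samples whose modified z-score, computed from the median absolute deviation (MAD), exceeds 3.5, a commonly used cutoff. The second is a regression gate that rejects a retrained model if its accuracy on a fixed, trusted test set drops too far. The function names, the scoring choice, the 3.5 threshold, and the 0.02 tolerance are illustrative assumptions, not a standard API.

```python
from statistics import median


def modified_z_scores(scores):
    """Robust z-scores: 0.6745 * (x - median) / MAD."""
    med = median(scores)
    mad = median(abs(s - med) for s in scores)
    if mad == 0:
        # At least half the scores equal the median, so the spread is zero
        # and no sample can be ranked as an outlier this way.
        return [0.0] * len(scores)
    return [0.6745 * (s - med) / mad for s in scores]


def flag_outliers(scores, threshold=3.5):
    """Return indices of samples whose |modified z-score| exceeds the threshold."""
    return [i for i, z in enumerate(modified_z_scores(scores)) if abs(z) > threshold]


def passes_regression_gate(baseline_accuracy, candidate_accuracy, max_drop=0.02):
    """Accept a retrained model only if its accuracy on a fixed, trusted test set
    is no more than max_drop below the previous model's accuracy."""
    return candidate_accuracy >= baseline_accuracy - max_drop


if __name__ == "__main__":
    # Hypothetical per-sample losses; how samples are scored is an assumption.
    losses = [0.21, 0.19, 0.25, 0.22, 0.20, 0.23, 2.9, 0.18]
    print(flag_outliers(losses))               # [6]
    print(passes_regression_gate(0.91, 0.90))  # True
    print(passes_regression_gate(0.91, 0.80))  # False
```

The median and MAD are used instead of the mean and standard deviation so that the suspicious samples themselves cannot easily inflate the spread and hide. A screen like this only catches samples that stand out on the chosen score; carefully crafted poisons may not, which is why it complements, rather than replaces, manual moderation and red teaming.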

A Difficult Problem to Solve

The stakes are high because these attacks can have far-reaching implications, and poisoning attacks are harder to detect than a common cyberattack. Attackers who poison training data may also have goals beyond simply disrupting a system: poisoned data can lead a model to generate biased opinions, spread misinformation, or even incite hate crimes. Because of the sheer volume of data used in training, poisoned samples are hard to identify and remove, which forces expensive and time-consuming fixes such as retraining the model on clean data.

Conclusion

In summary, AI training data poisoning represents a significant threat to the integrity and reliability of AI models. Tools like Nightshade highlight the need for greater awareness and robust security measures against these sophisticated attacks. As reliance on AI grows, so does the importance of safeguarding training data so that AI systems remain reliable and trustworthy.

How Can Netizen Help?

Netizen ensures that security gets built-in and not bolted-on. We provide advanced solutions to protect critical IT infrastructure, such as our popular “CISO-as-a-Service,” wherein companies can leverage the expertise of executive-level cybersecurity professionals without bearing the cost of employing them full time.

We also offer compliance support, vulnerability assessments, penetration testing, and more security-related services for businesses of any size and type.

Additionally, Netizen offers an automated and affordable assessment tool that continuously scans systems, websites, applications, and networks to uncover issues. Vulnerability data is then securely analyzed and presented through an easy-to-interpret dashboard to yield actionable risk and compliance information for audiences ranging from IT professionals to executive managers.

Netizen is an ISO 27001:2013 (Information Security Management), ISO 9001:2015, and CMMI V 2.0 Level 3 certified company. We are a proud Service-Disabled Veteran-Owned Small Business that is recognized by the U.S. Department of Labor for hiring and retention of military veterans.

Questions or concerns? Feel free to reach out to us any time –

https://www.netizen.net/contact