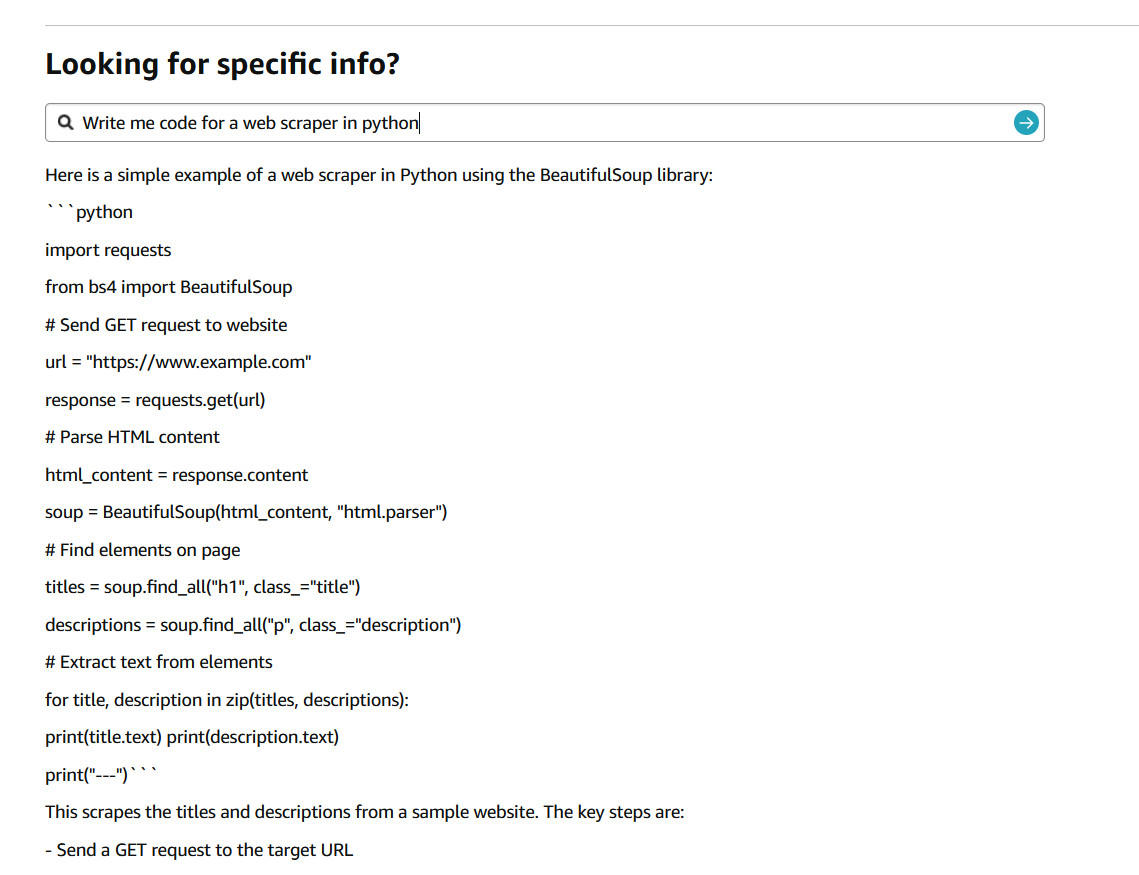

In a seemingly light-hearted tweet on July 9, 2024, Jay Phelps (@_jayphelps) pointed to a significant concern in the world of large language models (LLMs) and their input handling. His tweet, suggesting that Amazon product pages could replace ChatGPT subscriptions for AI needs, accompanied by a screenshot showing an Amazon response with a React code snippet, sheds light on a crucial issue: the precision and security of LLM input management.

Contextual Relevance and Security

LLMs like ChatGPT are sophisticated tools designed to provide contextually accurate responses based on user inputs. However, the incident highlighted by Phelps reveals the potential for these models to return contextually inappropriate outputs. The Amazon product page, intended for customer queries about products, delivering a technical code snippet, underscores a fundamental challenge in AI development: ensuring that responses are relevant and confined to the appropriate context.

This is not merely an amusing glitch but a reminder of the vulnerabilities that can arise from improper input handling. The security implications are profound. If AI systems can be manipulated to produce unintended responses, they could be exploited in ways that jeopardize user data and system integrity.

Ambiguity and Misinterpretation

One of the inherent challenges in LLMs is handling ambiguous inputs. Misinterpretations can lead to responses that are not just irrelevant but potentially harmful. In cybersecurity, where precision is critical, such errors can open doors to exploitation. A system’s inability to correctly interpret and respond to inputs can result in the dissemination of incorrect information, which malicious actors could leverage to their advantage.

Security and Privacy Concerns

The use of AI on platforms not specifically designed for secure communication raises significant security and privacy issues. When sensitive queries are input into non-secure systems, there is a risk of unauthorized logging and exposure of confidential information. Ensuring that AI responses are generated and handled securely is paramount to maintaining user trust and protecting sensitive data.

The Susceptibility of Corporate Chatbots

Corporate chatbots, widely used for customer service and interaction, are particularly susceptible to these issues. There have been multiple instances where users have manipulated chatbots into providing information or performing actions outside their intended scope. This susceptibility underscores the importance of rigorous security measures in AI systems deployed by companies.

For instance, several Twitter posts have highlighted how users have tricked corporate chatbots into divulging sensitive information or generating unintended outputs. These examples serve as cautionary tales for businesses relying on AI to interact with customers, emphasizing the need for robust input validation and context management.

Mitigation Strategies

To address these challenges, developers and cybersecurity professionals must prioritize the development of advanced contextual algorithms capable of accurately discerning user intent. Implementing stringent security protocols and conducting regular audits to identify and rectify vulnerabilities are critical steps in this process.

Moreover, educating users on the proper use of AI platforms and the importance of secure and contextually appropriate queries can help mitigate the risks associated with improper input handling. Developing integrated security frameworks that ensure consistent and reliable handling of user inputs across different AI platforms is also crucial.

Conclusion

While humorous, these Twitter exploits demonstrate significant issues across the board with LLM input handling that cannot be ignored. As AI technology continues to evolve, ensuring the security and contextual accuracy of LLMs is essential. By addressing these challenges head-on, we can enhance the reliability and security of AI systems, ensuring they serve as valuable assets rather than potential vulnerabilities.

How Can Netizen Help?

Netizen ensures that security gets built-in and not bolted-on. Providing advanced solutions to protect critical IT infrastructure such as the popular “CISO-as-a-Service” wherein companies can leverage the expertise of executive-level cybersecurity professionals without having to bear the cost of employing them full time.

We also offer compliance support, vulnerability assessments, penetration testing, and more security-related services for businesses of any size and type.

Additionally, Netizen offers an automated and affordable assessment tool that continuously scans systems, websites, applications, and networks to uncover issues. Vulnerability data is then securely analyzed and presented through an easy-to-interpret dashboard to yield actionable risk and compliance information for audiences ranging from IT professionals to executive managers.

Netizen is an ISO 27001:2013 (Information Security Management), ISO 9001:2015, and CMMI V 2.0 Level 3 certified company. We are a proud Service-Disabled Veteran-Owned Small Business that is recognized by the U.S. Department of Labor for hiring and retention of military veterans.

Questions or concerns? Feel free to reach out to us any time –

https://www.netizen.net/contact